Batch Processing API

Warning: This API is deprecated and will be removed in future versions. Please migrate to the Batch Processing V2 API as soon as possible.

Batch Processing API is only available for enterprise users. If you don't have an enterprise account, and would like to try it out, contact us for a custom offer.

The Batch Processing API (or "Batch API" for short) enables you to request data for large areas and/or longer time periods for any collection supported by Sentinel Hub, including BYOC (bring your own data).

It is an asynchronous REST service. This means that data will not be returned immediately in the response to a request but will instead be delivered to your object storage, which needs to be specified in the request (e.g. an S3 bucket, see AWS bucket settings below). The processing results will be divided into tiles as described below.

Workflow

The Batch Processing API comes with a set of REST APIs that support the execution of various workflows. The diagram below shows all possible statuses of a batch processing request (CREATED, ANALYSING, ANALYSIS_DONE, PROCESSING, DONE, FAILED, CANCELED, PARTIAL) and the user's actions (ANALYSE, START, RESTART, CANCEL) which trigger transitions among them.

The workflow starts when a user posts a new batch processing request. In this step the system:

- creates a new batch processing request with status CREATED,

- validates the user's inputs, and

- returns an estimated number of output tiles that will be processed.

The user can then decide to either request an additional analysis of the request, start the processing, or cancel the request. When additional analysis is requested:

- the status of the request changes to ANALYSING,

- the evalscript is validated,

- a list of required tiles is created, and

- the request's cost is estimated, i.e. the estimated number of processing units (PU) needed for the requested processing. Note that in case of ORBIT or TILE mosaicking the cost estimate can be significantly inaccurate, as described below.

- After the analysis is finished, the status of the request changes to ANALYSIS_DONE.

If the user chooses to directly start processing, the system still executes the analysis but when the analysis is done it automatically starts with processing. This is not explicitly shown in the diagram in order to keep it simple.

The user can now request a list of tiles for their request, start the processing, or cancel the request. When the user starts the processing:

- the estimated number of PU is reserved,

- the status of the request changes to PROCESSING (this may take a while),

- the processing starts.

When the processing finishes, the status of the request changes to:

- FAILED when all tiles failed processing,

- PARTIAL when some tiles were processed and some failed,

- DONE when all tiles were processed.

Although the process has built-in fault tolerance, tile processing may occasionally fail. In this case, the batch request ends up in status PARTIAL and the user can request a restart of its processing, as shown in this example. This will restart processing of all FAILED tiles.
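The rule for the final request status can be sketched in a few lines of Python. This is only an illustration of the three cases listed above; the function name `final_request_status` is hypothetical and not part of the Batch API, which computes the status on the server side.

```python
from collections import Counter


def final_request_status(tile_statuses):
    """Derive the final request status from the final statuses of its tiles.

    Hypothetical helper: mirrors the FAILED / PARTIAL / DONE rule in the text.
    """
    statuses = list(tile_statuses)
    counts = Counter(statuses)
    # Only finished tiles (DONE or FAILED) are meaningful at this point.
    if not statuses or set(counts) - {"DONE", "FAILED"}:
        raise ValueError("expected a non-empty list of DONE/FAILED tile statuses")
    if counts["FAILED"] == 0:
        return "DONE"      # all tiles were processed
    if counts["DONE"] == 0:
        return "FAILED"    # all tiles failed processing
    return "PARTIAL"       # some tiles were processed and some failed


print(final_request_status(["DONE", "FAILED", "DONE"]))
```

A request that ends up as PARTIAL is the one that can be restarted to reprocess its FAILED tiles.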

Evalscript validation

During analysis your evalscript will be validated against a portion of your selected area of interest. With SIMPLE mosaicking, your evalscript will only be evaluated against one scene. With ORBIT or TILE mosaicking, your evalscript will be evaluated against any number of possible scenes. That also includes a case with zero scenes (when no orbit or tile is available). Your evalscript must therefore be able to handle all such cases, even if your specific request does not contain such a case.

Example of an evalscript function that handles such cases:

    function evaluatePixel(S2L2A) {
      // S2L2A is an array with one entry per available scene (possibly empty)
      let sum = 0
      for (var i = 0; i < S2L2A.length; i++) {
        sum += S2L2A[i].B02
      }
      return sum;
    }

As the example shows, the S2L2A array holds the scenes that are available. In the case of zero scenes, the for loop is not entered and sum stays 0, ensuring the script also works with zero scenes.

Canceling the request

The user may cancel the request at any time. However:

- if the status is ANALYSING, the analysis will complete,

- if the status is PROCESSING, all tiles that have been processed or are being processed at that moment are charged for. The remaining PUs are returned to the user.

Automatic deletion of stale data

Stale requests will be deleted after some time. Specifically, the following requests will be deleted:

- failed requests (request status FAILED),

- requests that were created but never started (request statuses CREATED, ANALYSIS_DONE),

- successful requests (request statuses DONE and PARTIAL) for which it was not requested to add the results to your collections. Note that only such requests themselves will be deleted, while the requests' result (created imagery) will remain under your control in your S3 bucket.

Cost estimate

The cost estimate, provided in the analysis stage, is based on the rules for calculating processing units.

It takes the number of output pixels, the number of input bands, and the output format into account. However, for ORBIT or TILE mosaicking the number of data samples (i.e. the number of observations available in the requested time range) cannot be calculated accurately during the analysis. Our cost estimate is thus based on the assumption that one data sample is available every three days within the requested time range.

For example, we assume 10 available data samples between 1.1.2021 and 31.1.2021. If you request batch processing of more/fewer data samples, the actual cost will be proportionally higher/lower.

The actual costs can be significantly different from the estimate if:

- the number of data samples is reduced in your evalscript by the preProcessScenes function or by filters such as maxCloudCoverage. The actual cost will be lower than the estimate.

- your AOI (area of interest) includes large areas with no data, e.g. when requesting Sentinel-2 data over oceans. The actual cost will be lower than the estimate.

- you request processing of data collections with revisit period shorter/longer than three days (e.g. your BYOC collection). The actual cost will be proportionally higher/lower than the estimate. Revisit period depends also on selected AOI, e.g. the actual costs of processing Sentinel-2 data close to the equator/at high latitudes will be lower/higher than the estimate.

If you know how many data samples per pixel will be processed, you can adjust the estimate yourself. For example, if you request processing for data that is available daily, the cost will be 3 times higher than our estimate.

Note that the cost estimate does not take the multiplication factor of 1/3 for batch processing into account. The actual costs will be 3 times lower than the estimate.
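The adjustment described above can be written down as a small calculation. The sketch below is a rough illustration, not the service's own formula: the function names are hypothetical, the number of assumed samples is taken as the day difference divided by three (which gives 10 for 1.1.2021 to 31.1.2021), and the batch factor of 1/3 is applied on top of the proportional scaling.

```python
from datetime import date

ASSUMED_REVISIT_DAYS = 3  # the estimate assumes one data sample every three days
BATCH_DIVISOR = 3         # batch factor of 1/3, not included in the estimate


def assumed_samples(start, end):
    """Data samples the estimate assumes for the time range (assumed rounding)."""
    days = (end - start).days
    if days <= 0:
        raise ValueError("end must be later than start")
    return days / ASSUMED_REVISIT_DAYS


def adjusted_cost(estimated_pu, start, end, actual_samples):
    """Scale the estimate by the real number of samples, then apply the batch factor."""
    scaled = estimated_pu * actual_samples / assumed_samples(start, end)
    return scaled / BATCH_DIVISOR


start, end = date(2021, 1, 1), date(2021, 1, 31)
print(assumed_samples(start, end))                           # samples assumed by the estimate
print(adjusted_cost(300, start, end, actual_samples=30))     # daily data, estimate of 300 PU
```

With daily data the proportional scaling triples the estimate and the batch factor divides it by three again, so in this particular case the adjusted cost equals the original estimate.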

Tile status

Users can follow the progress of tile processing by checking the current status of each tile. This can be done directly in Dashboard, or via the API. The statuses are as follows:

- In the analysis phase, tiles are created with status PENDING.

- When tiles move into the scheduling queue, their status changes to SCHEDULED.

- When a tile is pulled from the queue and processing starts, it becomes PROCESSING.

- When tile processing succeeds or fails, the tile becomes DONE or FAILED, respectively.

- If a tile gets stuck, it goes back into PENDING up to twice. If it gets stuck the third time, it becomes a FAILED tile.

- When a batch request with status PARTIAL is restarted, all its FAILED tiles go back into PENDING.
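The tile lifecycle above can be modelled as a small state machine. The `Tile` class below is a hypothetical sketch for illustration only, not part of any Sentinel Hub client. Two details are assumptions because the text does not specify them: a tile may get stuck in any non-final status, and the stuck counter starts over after a restart.

```python
MAX_REQUEUES = 2  # per the text: a stuck tile returns to PENDING up to twice


class Tile:
    """Hypothetical model of a single tile's status transitions."""

    def __init__(self):
        self.status = "PENDING"  # tiles are created as PENDING during analysis
        self.stuck_count = 0

    def _move(self, expected, new):
        if self.status != expected:
            raise ValueError(f"cannot go from {self.status} to {new}")
        self.status = new

    def schedule(self):
        self._move("PENDING", "SCHEDULED")

    def start(self):
        self._move("SCHEDULED", "PROCESSING")

    def finish(self, success):
        self._move("PROCESSING", "DONE" if success else "FAILED")

    def stuck(self):
        # Assumption: a tile can get stuck in any non-final status.
        if self.status in ("DONE", "FAILED"):
            raise ValueError(f"a {self.status} tile cannot get stuck")
        self.stuck_count += 1
        self.status = "PENDING" if self.stuck_count <= MAX_REQUEUES else "FAILED"

    def restart(self):
        # Restarting a PARTIAL request sends its FAILED tiles back to PENDING.
        # Assumption: the stuck counter starts over after a restart.
        self._move("FAILED", "PENDING")
        self.stuck_count = 0


tile = Tile()
for _ in range(3):
    tile.stuck()
print(tile.status)  # third time stuck, so the tile is FAILED
```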

Tiling grids

For more effective processing we divide the area of interest into tiles and process each tile separately. While the Process API uses the grids that come with each data source for processing of the data, the Batch API uses one of the predefined tiling grids. The predefined tiling grids 0-2 are based on the Sentinel-2 tiling in WGS84/UTM projection with some adjustments:

- The width and height of tiles in the original Sentinel-2 grid is 100 km, while the width and height of tiles in our grids are given in the table below.

- All redundant tiles (i.e. fully overlapped tiles) are removed.

All available tiling grids can be requested with the following call (NOTE: to run this example you first need to create an OAuth client, as explained here):

    # `oauth` is the authenticated OAuth session created beforehand
    url = "https://services.sentinel-hub.com/api/v1/batch/tilinggrids/"
    response = oauth.request("GET", url)
    response.json()

This will return the list of available grids and information about tile size and available resolutions for each grid. Currently, available grids are:

| name | id | tile size | resolutions | coverage | output CRS | download the grid [zip with shp file] ** |
|---|---|---|---|---|---|---|
| UTM 20km grid | 0 | 20040 m | 10 m, 20 m, 30 m*, 60 m | World, latitudes from -80.7° to 80.7° | UTM | UTM 20km grid |
| UTM 10km grid | 1 | 10000 m | 10 m, 20 m | World, latitudes from -80.6° to 80.6° | UTM | UTM 10km grid |
| UTM 100km grid | 2 | 100080 m | 30 m*, 60 m, 120 m, 240 m, 360 m | World, latitudes from -81° to 81° | UTM | UTM 100km grid |
| WGS84 1 degree grid | 3 | 1° | 0.0001°, 0.0002° | World, all latitudes | WGS84 | WGS84 1 degree grid |
| LAEA 100km grid | 6 | 100000 m | 40 m, 50 m, 100 m | Europe, including Turkey, Iceland, Svalbard, Azores, and Canary Islands | EPSG:3035 | LAEA 100km grid |
| LAEA 20km grid | 7 | 20000 m | 10 m, 20 m | Europe, including Turkey, Iceland, Svalbard, Azores, and Canary Islands | EPSG:3035 | LAEA 20km grid |

* The 30m grid is only available on the us-west-2 deployment to provide an appropriate option for the Harmonized Landsat Sentinel collection. It is not recommended for "regular" Landsat collections as the pixel placement of those is slightly shifted relative to the output grid, therefore data interpolation will occur.

** The geometries of the tiles are reprojected to WGS84 for download. Because of this and other reasons the geometries of the output rasters may differ from the tile geometries provided here.

To use the 20km grid with 60 m resolution, for example, specify the id and resolution parameters of the tilingGrid object when creating a new batch request (see an example of a full request) as:

    {
      ...
      "tilingGrid": {
        "id": 0,
        "resolution": 60.0
      },
      ...
    }

Contact us if you would like to use any other grid for processing.
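Before submitting a request it can be handy to check the chosen grid and resolution against the table above. The following sketch hard-codes that table and builds the `tilingGrid` object; the helper name `tiling_grid` and the `TILING_GRIDS` dictionary are hypothetical, and the authoritative list is whatever the `tilinggrids` endpoint returns for your deployment.

```python
# Grid ids and resolutions copied from the table above (metres, or degrees for grid 3).
# Note: the 30 m option is only available on the us-west-2 deployment.
TILING_GRIDS = {
    0: ("UTM 20km grid", [10.0, 20.0, 30.0, 60.0]),
    1: ("UTM 10km grid", [10.0, 20.0]),
    2: ("UTM 100km grid", [30.0, 60.0, 120.0, 240.0, 360.0]),
    3: ("WGS84 1 degree grid", [0.0001, 0.0002]),
    6: ("LAEA 100km grid", [40.0, 50.0, 100.0]),
    7: ("LAEA 20km grid", [10.0, 20.0]),
}


def tiling_grid(grid_id, resolution):
    """Return the tilingGrid part of a batch request, or raise if the pair is not in the table."""
    if grid_id not in TILING_GRIDS:
        raise ValueError(f"unknown tiling grid id: {grid_id}")
    name, resolutions = TILING_GRIDS[grid_id]
    if float(resolution) not in resolutions:
        raise ValueError(f"{name} does not offer resolution {resolution}")
    return {"id": grid_id, "resolution": float(resolution)}


print(tiling_grid(0, 60))
```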

Batch collection

Batch processing results can also be uploaded into a BYOC-like collection, which makes it possible to:

- Access data with Processing API, by using the collection ID

- Create a configuration with custom layers

- Make OGC requests to a configuration

- View data in EO Browser

Users can either upload data to an existing batch collection by specifying the collectionId, or create a new one by using the createCollection parameter.

Read about both options in BATCH API reference.

When creating a new batch collection, one has to be careful to:

- Make sure that "cogOutput"=true

- Make sure the evalscript returns only single-band outputs

- Keep sampleType in mind, as the values the evalscript returns when creating a collection will be the values available when making a request to access it

Regardless of whether the user specifies an existing collection or requests a new one, processed data will still be uploaded to the user's S3 bucket, where they will be available for download and analysis.

Processing results

The outputs of batch processing will be stored in your object storage either in GeoTIFF format (with JSON for metadata) or in Zarr format.

GeoTIFF output format

GeoTIFF format will be used if your request contains the output field. An example of a batch request with GeoTIFF

output is available here.

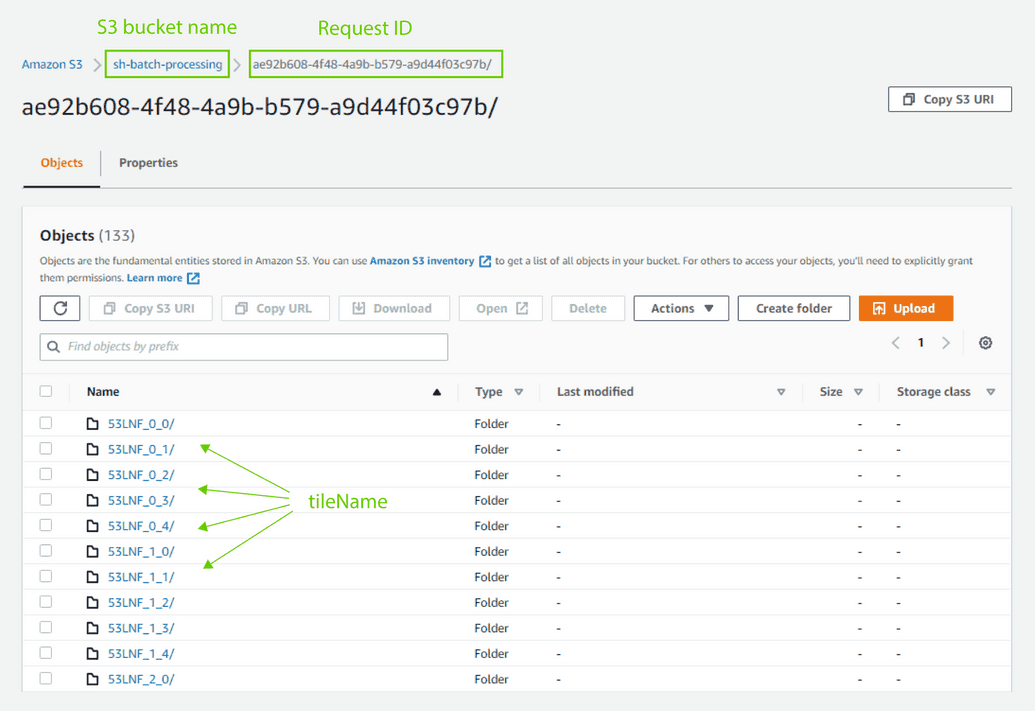

By default, the results will be organized in sub-folders where one sub-folder will be created for each tile. Each

sub-folder might contain one or more images depending on how many outputs were defined in

the evalscript of the request. For example:

You can also customize the sub-folder structure and file naming as described in the defaultTilePath parameter

under output

in BATCH API reference .

You can choose to return your GeoTIFF files as Cloud Optimized GeoTIFF (COG) by setting the cogOutput parameter under output in your request to true. Several advanced COG options can be selected as well; read about the parameter in BATCH API reference.

The results of batch processing will be in the projection of the selected tiling grid. For UTM-based grids, each part of the AOI (area of interest) is delivered in the UTM zone with which it intersects. In other words, if your AOI intersects several UTM zones, the results will be delivered as tiles in different UTM zones (and thus different CRSs).
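To get a feel for how many CRSs an AOI may produce, the standard 6° UTM zone formula can be applied to its longitude range. This is a generic sketch, not the service's tile assignment: it ignores the special zones around Norway and Svalbard and the grid adjustments mentioned earlier, and it assumes a bounding box that does not cross the antimeridian. All function names are hypothetical.

```python
import math


def utm_zone(lon):
    """Standard UTM zone number (1-60) for a longitude in degrees."""
    return int(math.floor((lon + 180.0) / 6.0)) % 60 + 1


def utm_epsg(lon, lat):
    """EPSG code of the WGS84/UTM CRS: 326xx for the north, 327xx for the south."""
    return (32600 if lat >= 0 else 32700) + utm_zone(lon)


def zones_for_bbox(min_lon, max_lon):
    """UTM zones touched by a longitude range (no antimeridian crossing)."""
    return list(range(utm_zone(min_lon), utm_zone(max_lon) + 1))


print(zones_for_bbox(11.0, 13.0))  # an AOI spanning a zone boundary at 12°E
print(utm_epsg(14.5, 46.0))
```

An AOI that touches two zones in this calculation should be expected to yield output tiles in two different CRSs, which matters when mosaicking the results afterwards.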

Zarr output format

Zarr format will be used if your request contains the zarrOutput field. An example of a batch request with Zarr output is available here. Your request must only have one band per output and the application/json format in responses is not supported.

The outputs of batch processing will be stored as a single Zarr group containing one data array for each evalscript output and multiple coordinate arrays. By default, the Zarr will be stored in the folder you pass to the Batch Processing API in the path parameter under zarrOutput (see BATCH API reference). The folder must not contain any existing Zarr files. We recommend using the <requestId> placeholder, as explained in the API reference, to keep the results of your processing better organized.

The results of batch processing will be in the projection of the selected tiling grid. Tiling grids whose output CRS is UTM are not supported for Zarr output.

Batch deployment

Batch is available in two AWS regions: AWS EU (Frankfurt) and AWS US (Oregon). The Batch API endpoint depends on the chosen deployment as specified in the table below.

| Batch deployment | Batch URL end-point |
|---|---|
| AWS EU (Frankfurt) | https://services.sentinel-hub.com/api/v1/batch |
| AWS US (Oregon) | https://services-uswest2.sentinel-hub.com/api/v1/batch |

AWS bucket settings

Bucket region

The bucket to which the results will be delivered needs to be in the same region as the batch deployment you will use, that is, either eu-central-1 when using the EU region (Frankfurt) or us-west-2 when using the US region (Oregon).
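Since the endpoint and the bucket region have to match, one simple way to avoid a mismatch is to derive the endpoint from the bucket's region. The sketch below only encodes the deployment table above; the `batch_endpoint` helper and the `BATCH_ENDPOINTS` dictionary are hypothetical names.

```python
# Bucket region -> Batch API endpoint, taken from the deployment table above.
BATCH_ENDPOINTS = {
    "eu-central-1": "https://services.sentinel-hub.com/api/v1/batch",
    "us-west-2": "https://services-uswest2.sentinel-hub.com/api/v1/batch",
}


def batch_endpoint(bucket_region):
    """Return the Batch API endpoint that matches the region of the target bucket."""
    try:
        return BATCH_ENDPOINTS[bucket_region]
    except KeyError:
        supported = ", ".join(sorted(BATCH_ENDPOINTS))
        raise ValueError(
            f"no batch deployment in {bucket_region}; supported regions: {supported}"
        ) from None


print(batch_endpoint("us-west-2"))
```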

Bucket settings

Your AWS bucket needs to be configured to allow full access to Sentinel Hub. To do this, update your bucket policy to include the following statement (don't forget to replace <bucket_name> with your actual bucket name):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Sentinel Hub permissions",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::614251495211:root"
          },
          "Action": [
            "s3:*"
          ],
          "Resource": [
            "arn:aws:s3:::<bucket_name>",
            "arn:aws:s3:::<bucket_name>/*"
          ]
        }
      ]
    }
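If you manage several buckets, the policy can be generated rather than edited by hand. The sketch below fills the bucket name into the statement shown above and prints the resulting JSON; the function name `sentinel_hub_bucket_policy` and the bucket name `my-batch-results` are placeholders chosen for illustration.

```python
import json

SENTINEL_HUB_PRINCIPAL = "arn:aws:iam::614251495211:root"  # principal from the policy above


def sentinel_hub_bucket_policy(bucket_name):
    """Build the bucket policy from the text for the given bucket name."""
    if not bucket_name or "<" in bucket_name:
        raise ValueError("replace <bucket_name> with your actual bucket name")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Sentinel Hub permissions",
                "Effect": "Allow",
                "Principal": {"AWS": SENTINEL_HUB_PRINCIPAL},
                "Action": ["s3:*"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",    # the bucket itself
                    f"arn:aws:s3:::{bucket_name}/*",  # every object in the bucket
                ],
            }
        ],
    }


print(json.dumps(sentinel_hub_bucket_policy("my-batch-results"), indent=2))
```

If the bucket already has a policy, add the generated statement to its existing Statement list instead of replacing the whole document.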

Tutorials and Other Related Materials

Watch our webinar on batch processing (June 23, 2021), where you will learn how to process large amounts of satellite data step by step. The webinar will show you how to process and download data, create a collection, and access it using the Processing API.

To learn more about how batch processing can be used to create huge mosaics or to enhance your algorithms, read the following blog posts:

- How to create your own Cloudless Mosaic in less than an hour, November 3, 2020

- Scale-up your eo-learn workflow using Batch Processing API, September 17, 2020

- Large-scale data preparation — introducing Batch Processing, January 7, 2020